Distributed multi-agent navigation faces inherent challenges due to the competing requirements of maintaining safety and achieving goal-directed behavior, particularly for agents with limited sensing range operating in unknown environments with dense obstacles. Existing approaches typically project predefined goal-reaching controllers onto control barrier function (CBF) constraints, often resulting in conservative and suboptimal trade-offs between safety and goal-reaching performance.
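To make the baseline concrete, the following is a minimal sketch of the projection step such approaches perform. It is an illustration under stated assumptions, not any specific method from the literature: a single agent with single-integrator dynamics (velocity control), one circular obstacle, a hand-written CBF `h(x) = ||x - c||^2 - r^2`, and a linear class-K term `alpha * h`. With a single affine constraint the quadratic program has a closed-form solution, so no solver is needed. The function name `cbf_safety_filter` and all parameters are hypothetical.

```python
import numpy as np

def cbf_safety_filter(x, u_nom, center, radius, alpha=1.0):
    """Minimally modify u_nom so that the CBF condition h_dot + alpha * h >= 0 holds.

    Assumes single-integrator dynamics x_dot = u and x != center.
    """
    x, u_nom, center = (np.asarray(v, dtype=float) for v in (x, u_nom, center))
    diff = x - center
    h = diff @ diff - radius**2   # h(x) >= 0 means the agent is outside the obstacle
    a = 2.0 * diff                # gradient of h; with x_dot = u, h_dot = a @ u
    b = -alpha * h                # CBF condition rewritten as a @ u >= b
    slack = a @ u_nom - b
    if slack >= 0.0:
        return u_nom              # nominal control already satisfies the constraint
    # Euclidean projection of u_nom onto the half-space {u : a @ u >= b}
    return u_nom - slack * a / (a @ a)

# Agent at (-2, 0) driving toward an obstacle of radius 1 at the origin.
print(cbf_safety_filter([-2.0, 0.0], [1.0, 0.0], [0.0, 0.0], 1.0))  # [0.75 0.  ]
# On the obstacle boundary, heading straight at it: the filter returns zero velocity.
print(cbf_safety_filter([-1.0, 0.0], [1.0, 0.0], [0.0, 0.0], 1.0))  # [0. 0.]
```

The second call shows how a purely local projection can stall: the filtered control is zero even though the goal lies beyond the obstacle, because the filter only removes the unsafe component of the nominal command and never plans a detour.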

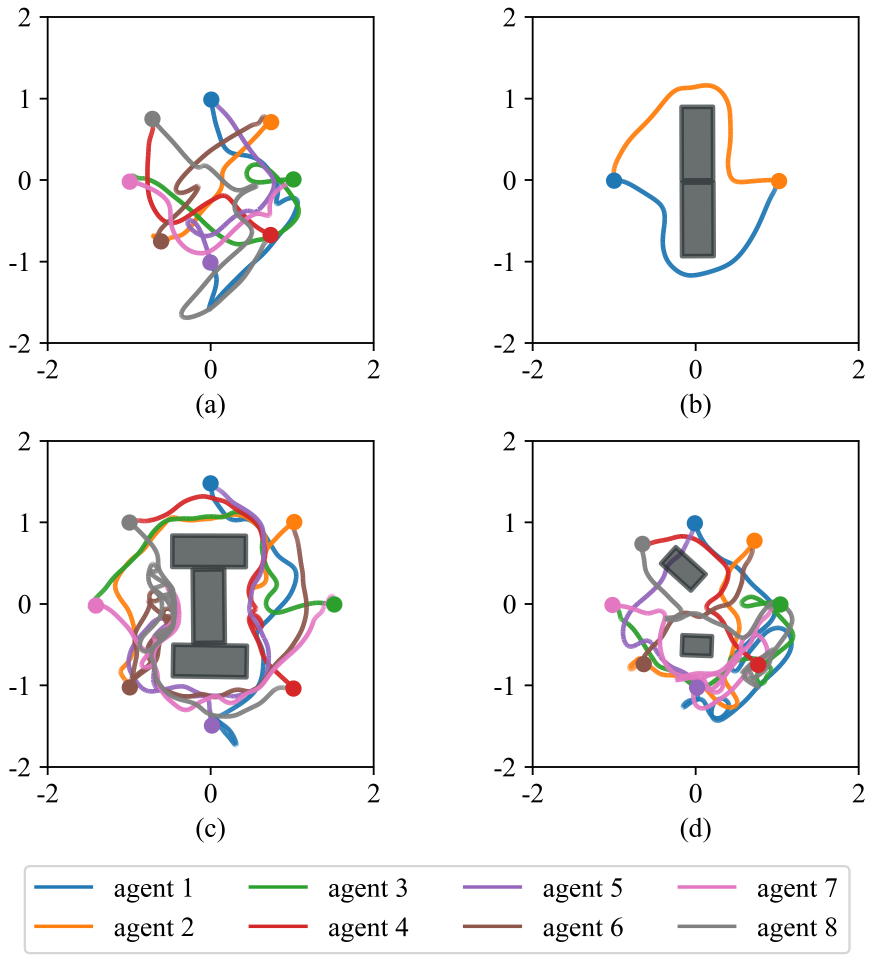

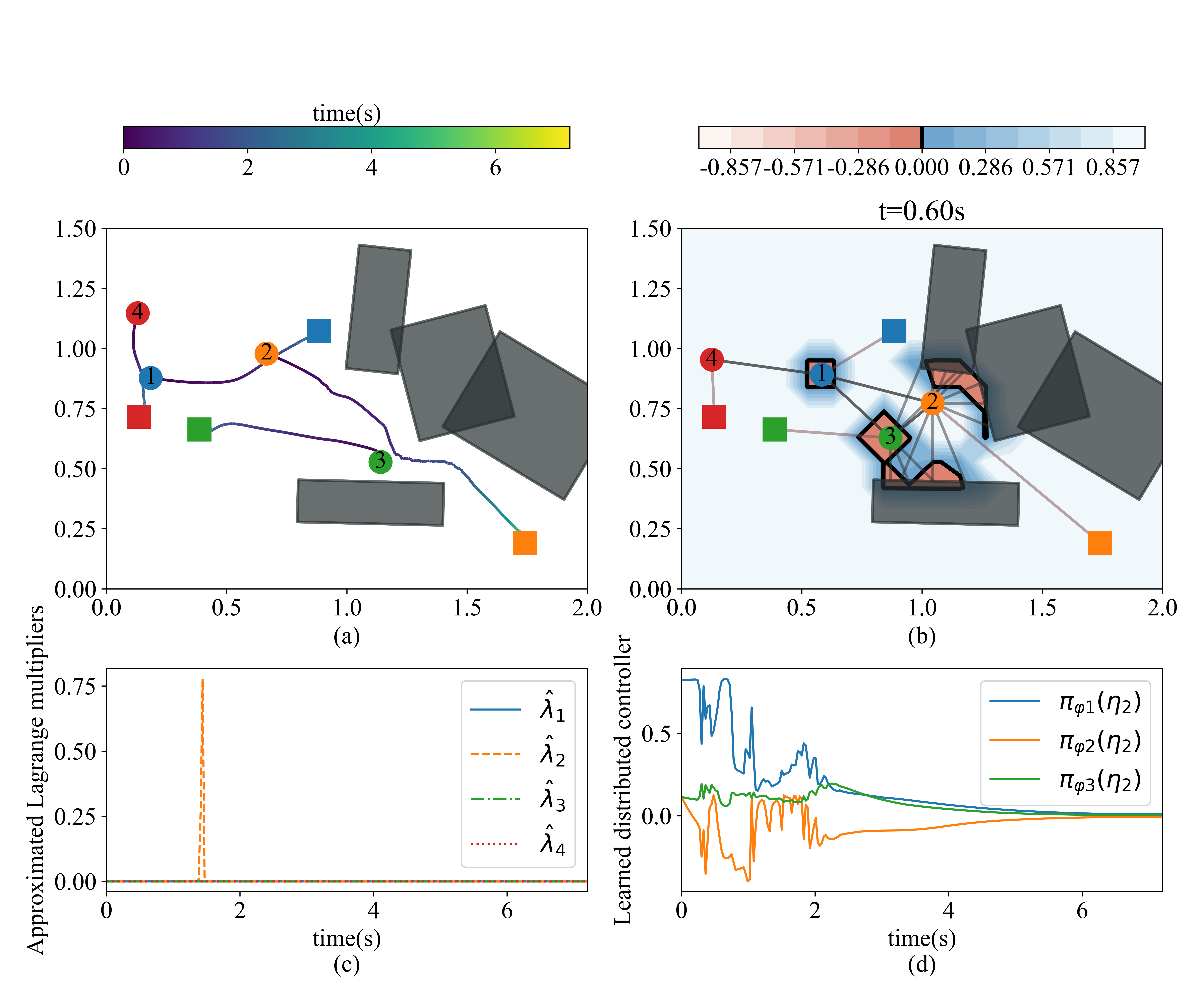

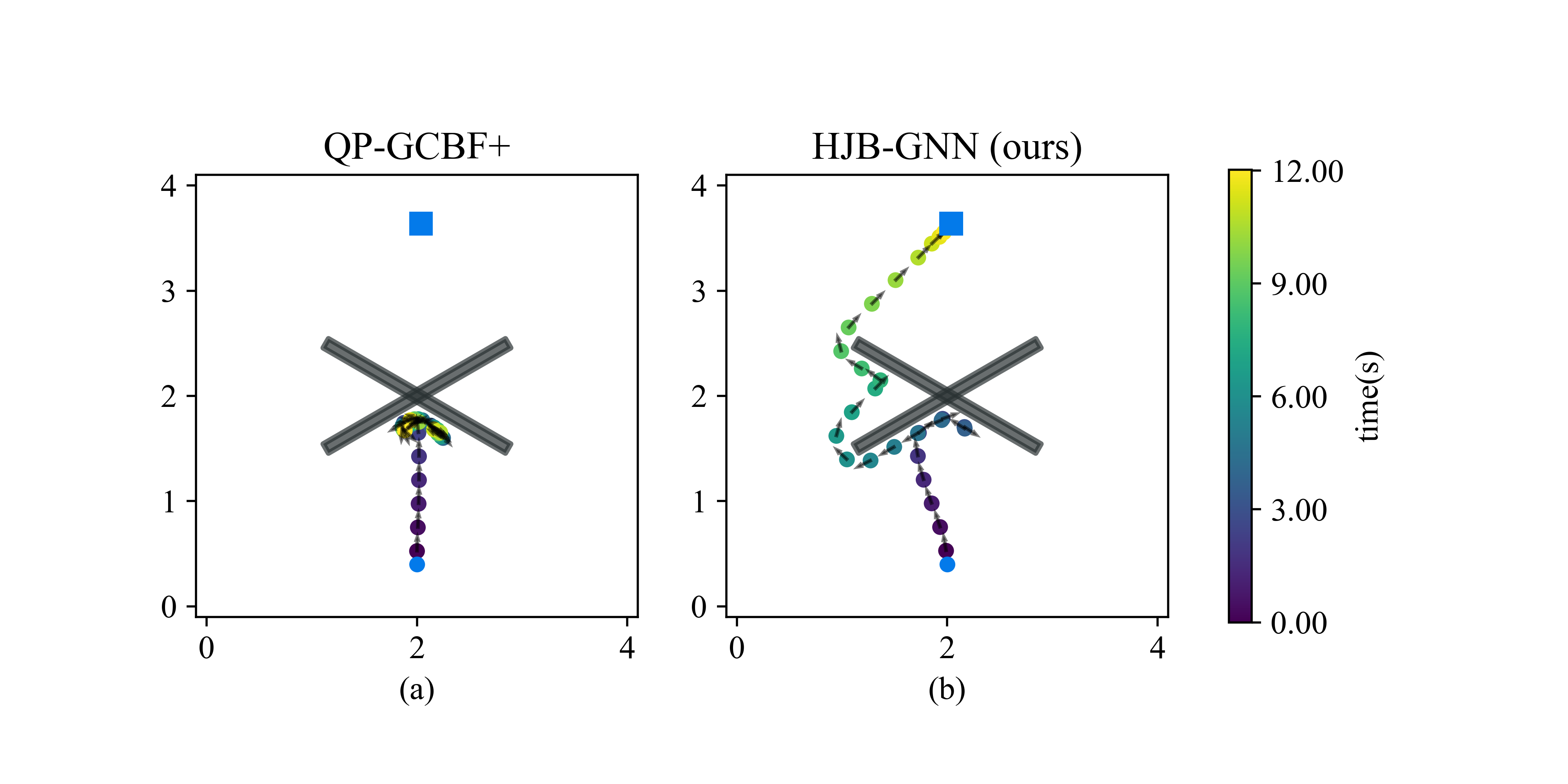

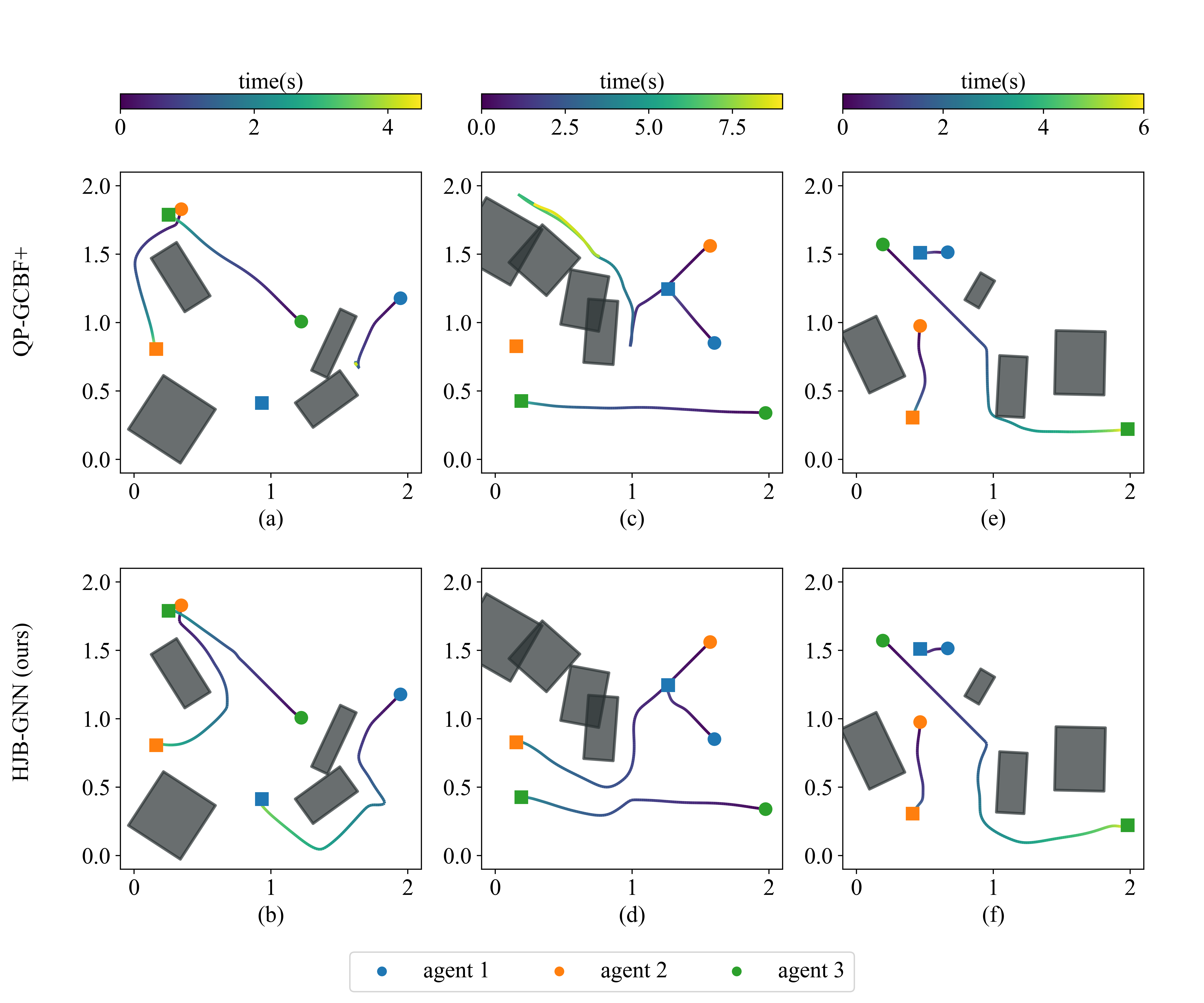

We propose an infinite-horizon CBF-constrained optimal graph control formulation for distributed safe multi-agent navigation. By deriving the analytical solution structure, we develop a novel Hamilton-Jacobi-Bellman (HJB)-based learning framework to approximate the solution. In particular, our algorithm jointly learns a CBF and a distributed control policy, both parameterized by graph neural networks (GNNs), along with a value function that robustly guides agents toward their goals. Moreover, we introduce a state-dependent parameterization of Lagrange multipliers, enabling dynamic trade-offs between safety and performance. Unlike traditional short-horizon, quadratic programming-based CBF methods, our approach leverages long-horizon optimization to proactively avoid deadlocks and navigate complex environments more effectively.
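As a rough illustration of how a CBF-constrained HJB equation yields an analytical control structure, consider a generic single-agent, control-affine simplification. Everything in this sketch is an assumption introduced for illustration and not the paper's exact graph-based formulation: the dynamics $\dot{x} = f(x) + g(x)u$, the running cost $q(x) + u^\top R u$ with $R \succ 0$, the class-$\mathcal{K}$ function $\alpha$, the CBF $h$, the value function $V$, and the multiplier $\lambda(x)$.

$$
\min_{u(\cdot)} \int_0^\infty \big( q(x) + u^\top R u \big)\, dt
\quad \text{s.t.} \quad \dot{x} = f(x) + g(x)u, \qquad
\nabla h(x)^\top \big( f(x) + g(x)u \big) + \alpha\big(h(x)\big) \ge 0 .
$$

Attaching a state-dependent multiplier $\lambda(x) \ge 0$ to the CBF constraint gives the stationary HJB condition

$$
0 = \min_{u} \Big[ q(x) + u^\top R u + \nabla V(x)^\top \big( f(x) + g(x)u \big)
- \lambda(x) \Big( \nabla h(x)^\top \big( f(x) + g(x)u \big) + \alpha\big(h(x)\big) \Big) \Big].
$$

Setting the gradient with respect to $u$ to zero, $2Ru + g(x)^\top \nabla V(x) - \lambda(x)\, g(x)^\top \nabla h(x) = 0$, yields

$$
u^*(x) = -\tfrac{1}{2} R^{-1} g(x)^\top \big( \nabla V(x) - \lambda(x)\, \nabla h(x) \big),
$$

together with complementary slackness, $\lambda(x) \Big( \nabla h(x)^\top \big( f(x) + g(x)u^* \big) + \alpha\big(h(x)\big) \Big) = 0$. In this simplified picture, $\lambda(x)$ vanishes where the safety constraint is inactive, so the control follows the value-function gradient alone, and it grows near constraint boundaries, pushing the agent along $\nabla h$. This is one way to read the dynamic trade-off between safety and performance that a state-dependent multiplier provides.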

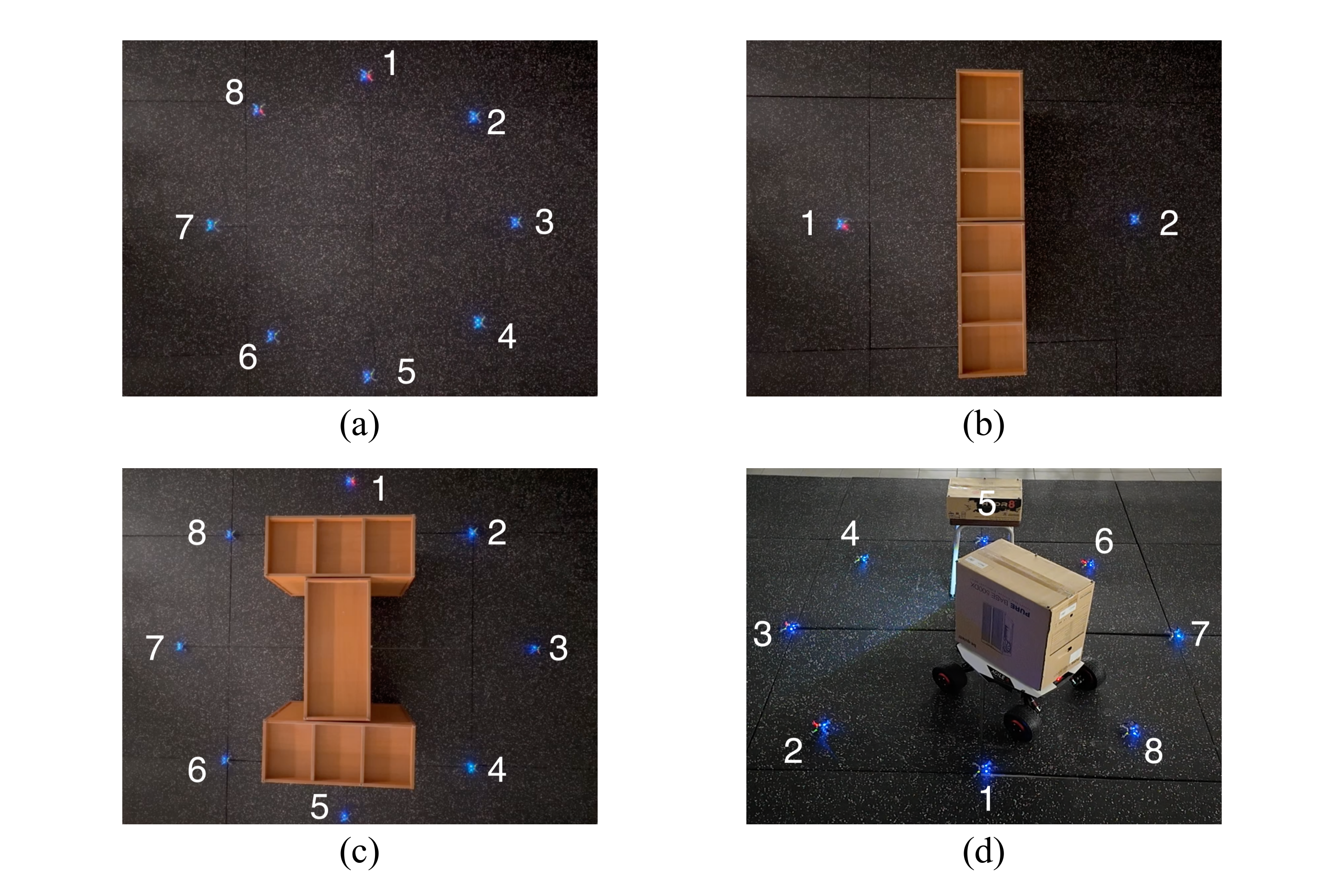

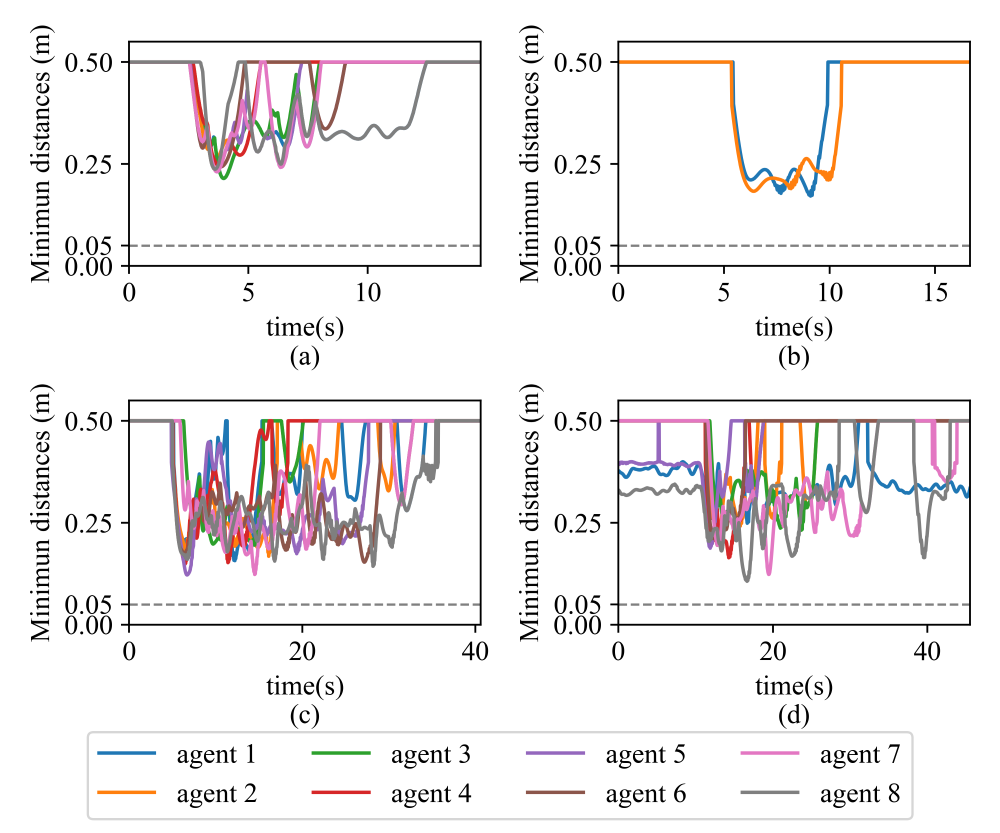

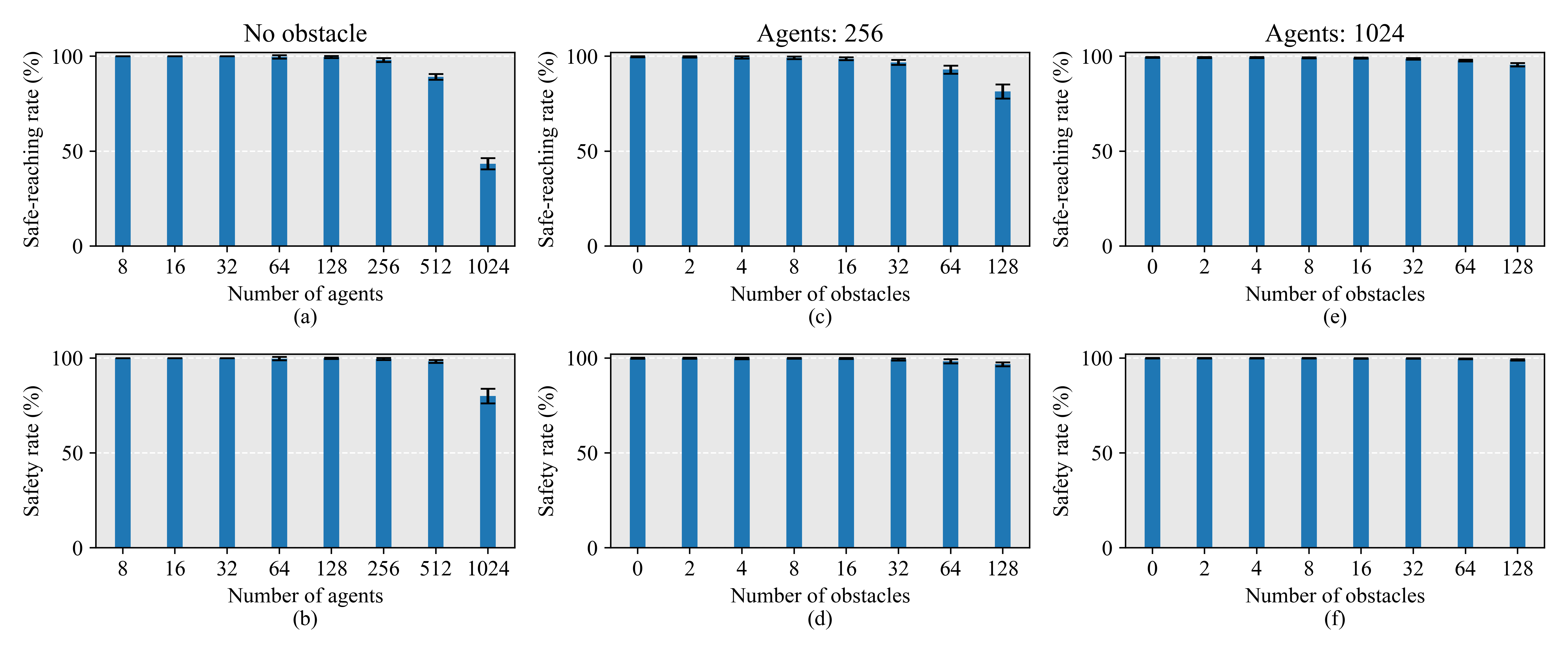

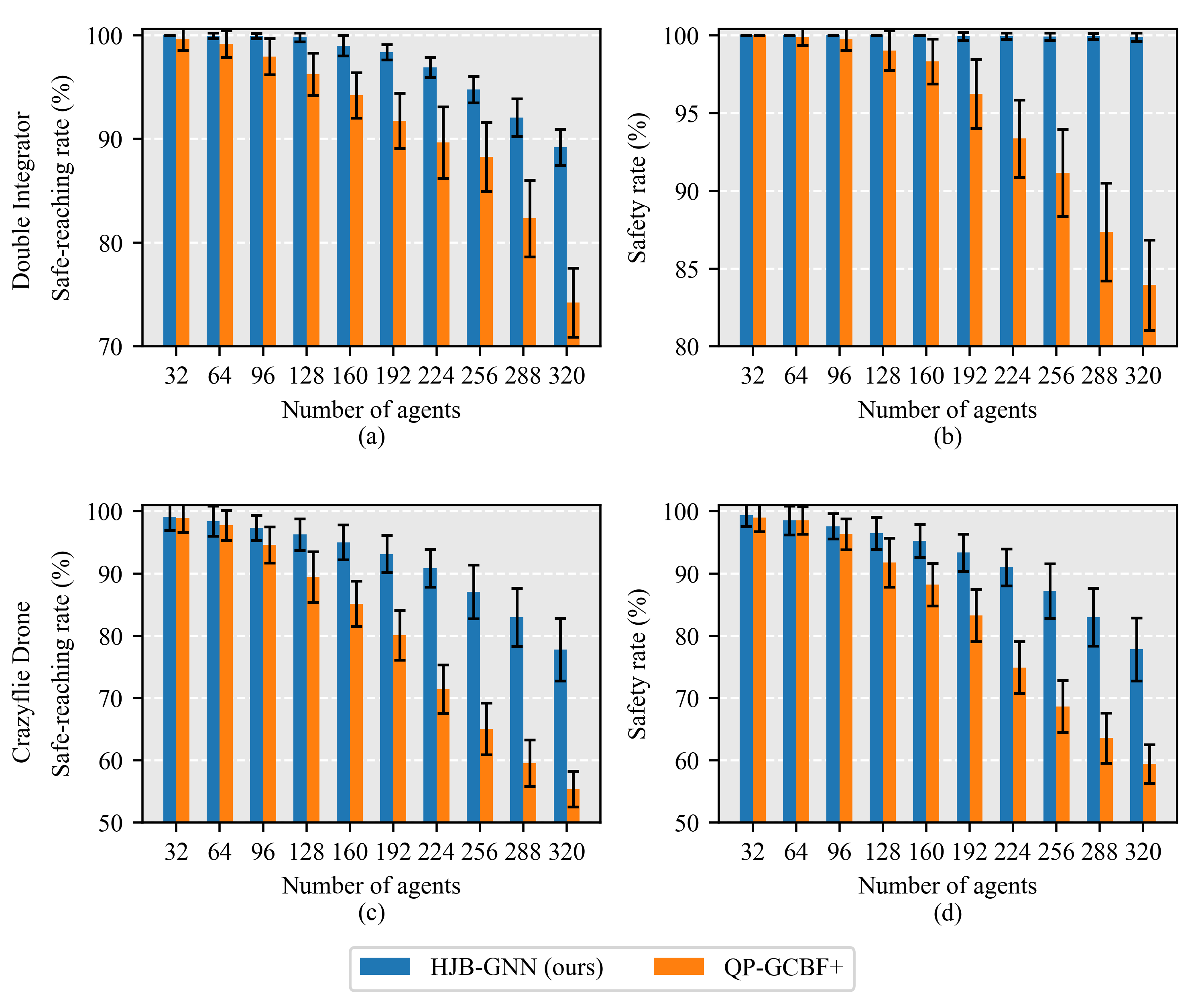

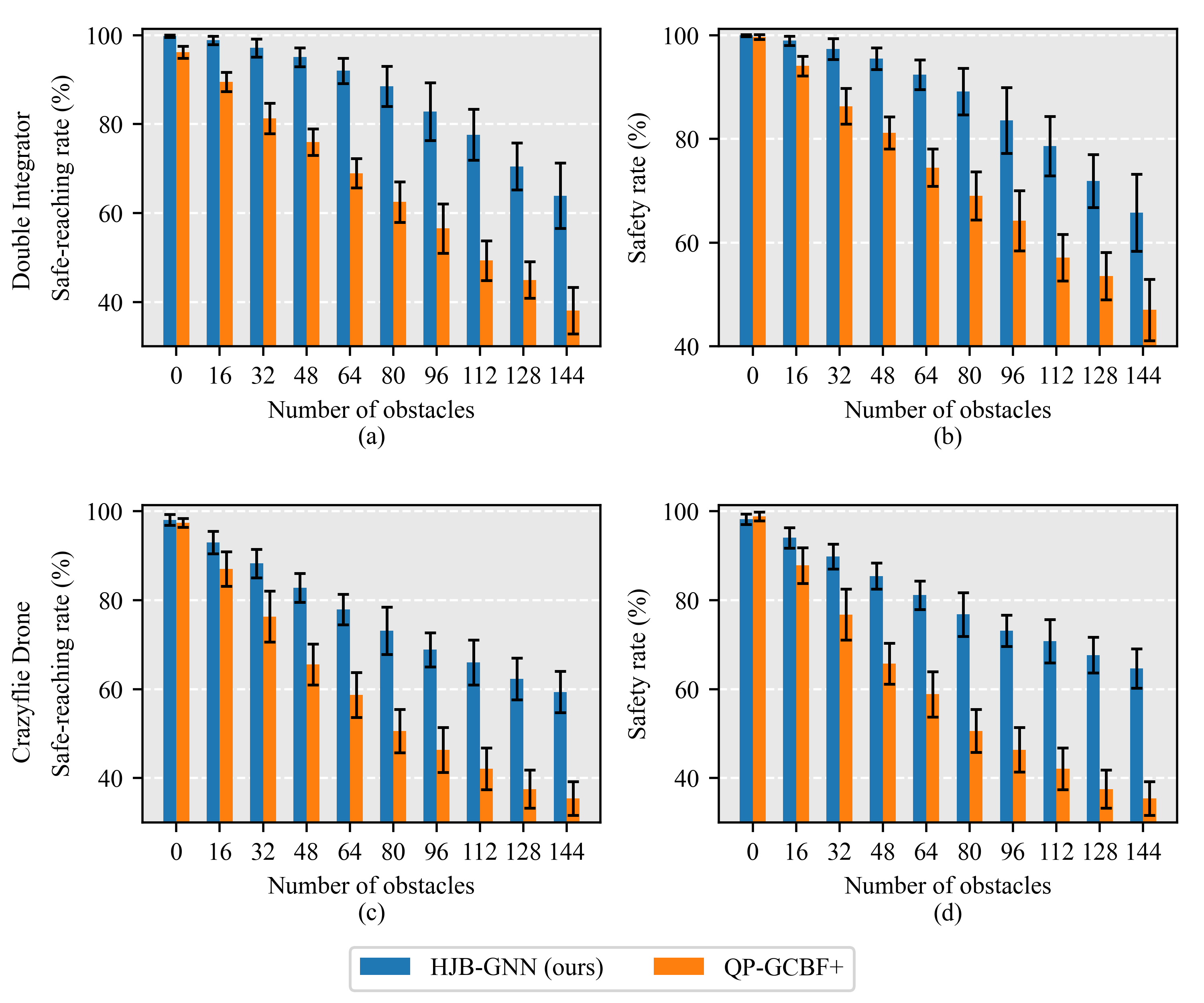

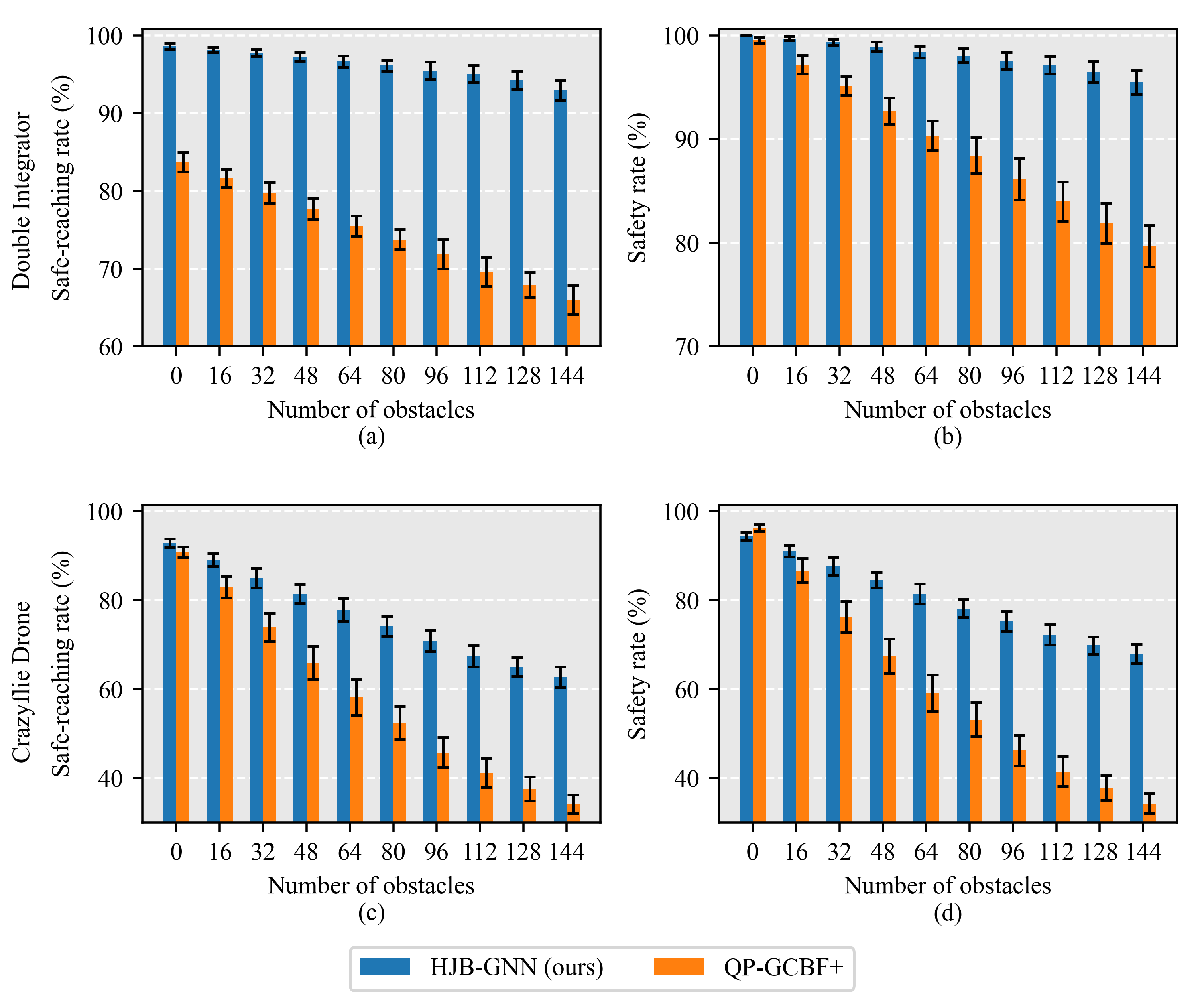

Extensive simulation results demonstrate substantial improvements in safety and task success rates across various agent dynamics, with strong scalability and generalization to large-scale teams in previously unseen environments. Real-world experiments using Crazyflie drone swarms on challenging antipodal position-swapping tasks further validate the practicality, generalizability, and robustness of the proposed HJB-GNN learning framework.